In a past life, I did a lot of software engineering. Listed here are a few open-source projects I started (apart from research codebases), along with talks I have presented at software conferences.

technical reports/mini-projects/school course projects


AirLetters: An Open Video Dataset of Characters Drawn in the Air

ECCV HANDS Workshop 2024


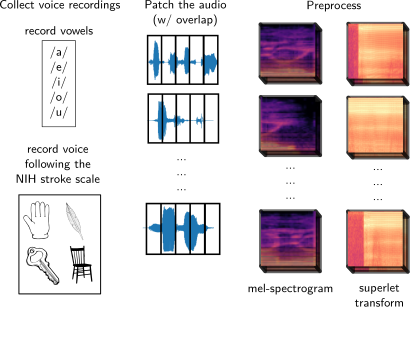

Tuning In: Analysis of Audio Classifier Performance in Clinical Settings with Limited Data

PMLR 2024


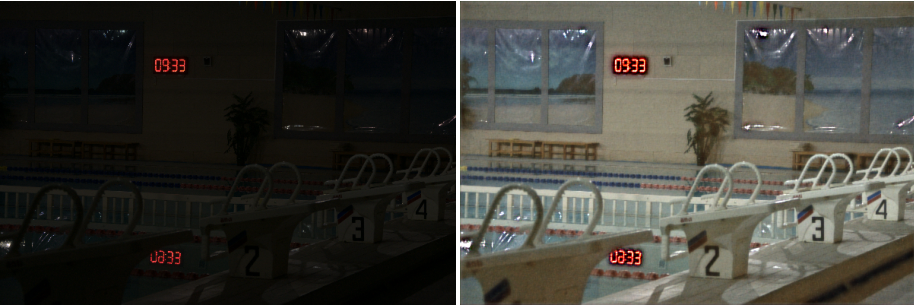

DiffuseRAW: End-to-End Generative RAW Image Processing for Low-Light Images

Rishit Dagli

arXiv preprint arXiv:2402.18575 2023


CPPE-5: Medical Personal Protective Equipment Dataset

Springer Nature Computer Science 2021

selected software

torch-diffsim

Parallelizable, differentiable, deformable simulation entirely in PyTorch

Simplicits Nerfstudio

Qualitative and speed improvements, plus a more complete implementation of Simplicits that can simulate a mesh, a Gaussian Splat, or a NeRF.

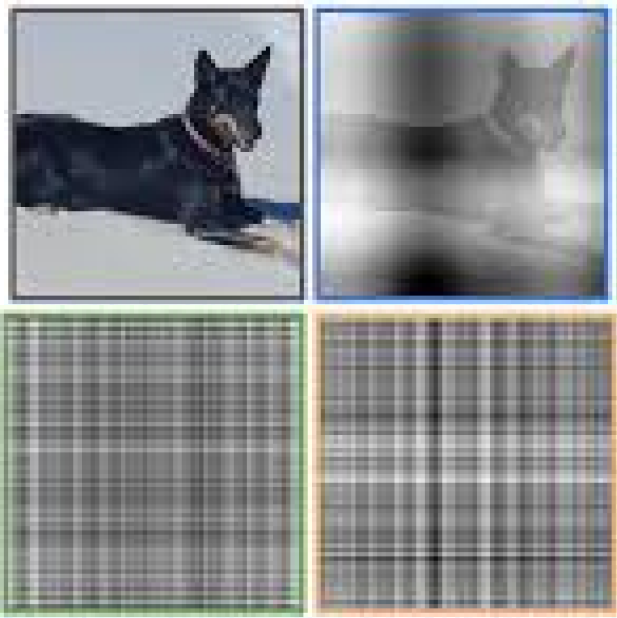

MIRNet-TFJS

TensorFlow.js models of MIRNet for low-light💡 image enhancement that run entirely in your browser.

(GitHub Trending)

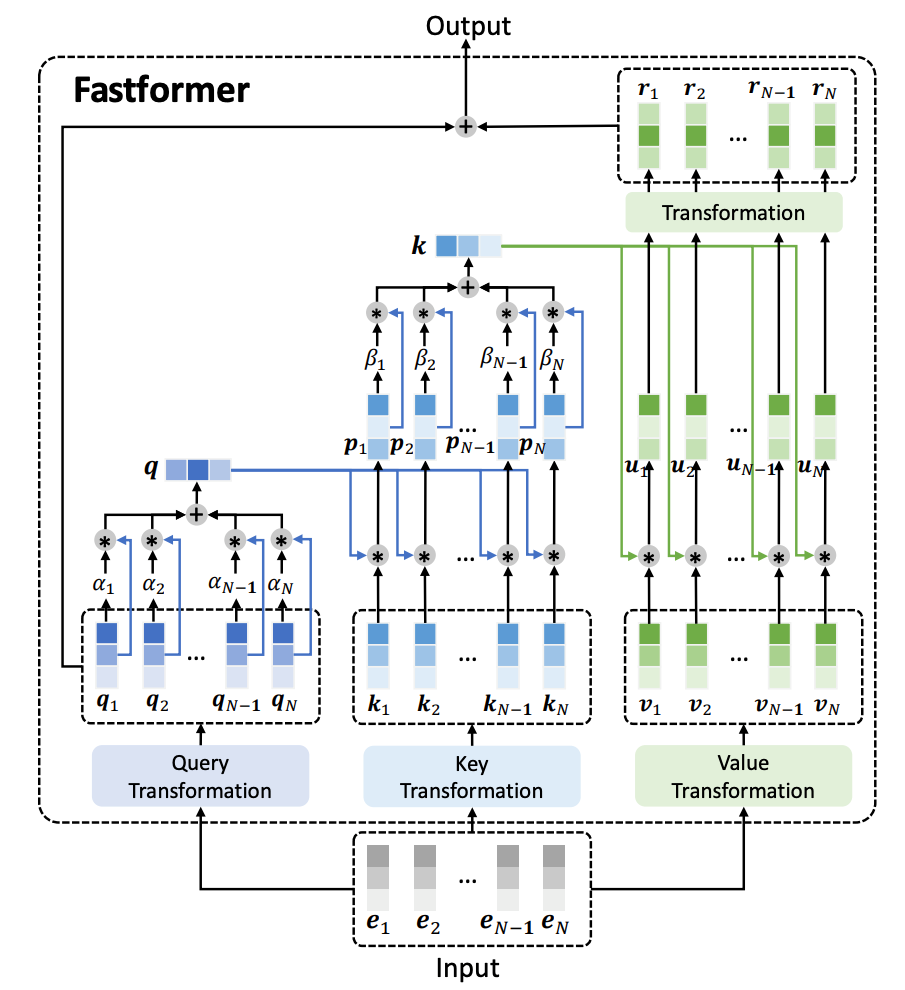

Fast-Transformer

An optimized implementation of Additive Attention.

(GitHub Trending)

3D Transforms

A library to easily work with 3D data and make 3D transformations.

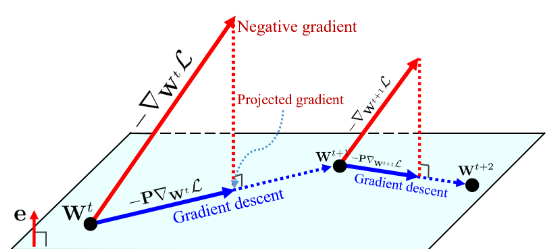

Gradient-Centralization

Improve your training performance by adding Gradient Centralization to your optimizers.

(GitHub Trending)

Perceiver

An optimized implementation of Perceiver.

(GitHub Trending)

Greenathon

Originally a hackathon submission, this project shows how to train models specifically for deployment entirely in the browser.

(GitHub Trending)

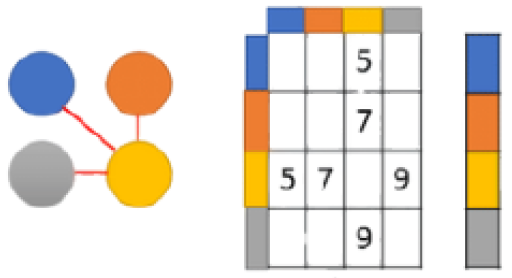

ISAB

A framework for using permutation-invariant neural networks.

ML With Android 11

Popular samples for optimized inference of machine learning models on Android with TensorFlow Lite, using capabilities introduced in Android 11.

(GitHub Trending)

Face-Recognition Flutter

Popular samples for optimized inference of machine learning models on Android with Flutter and Firebase ML Kit.

TF Watcher

A tool to monitor your ML jobs remotely.

(GitHub Trending)

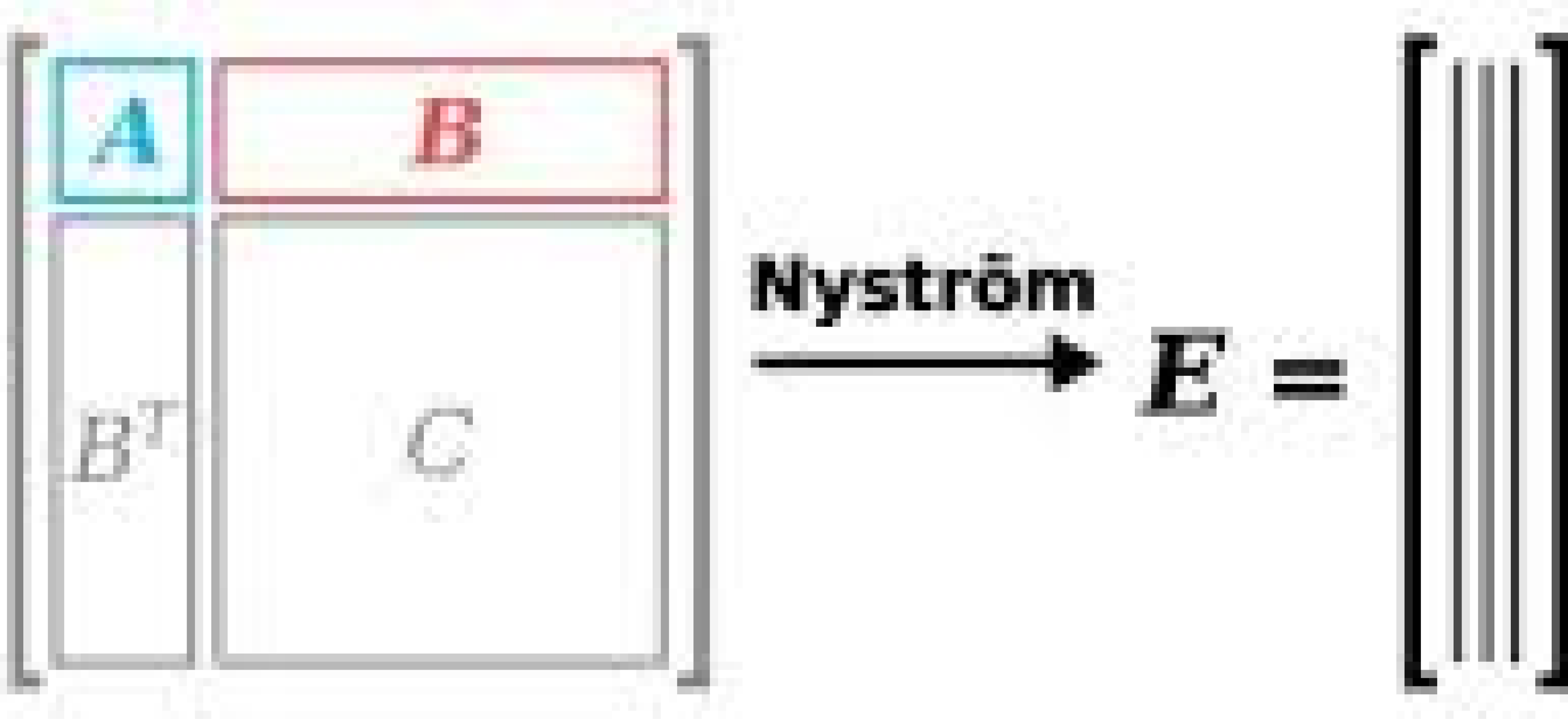

Nystromformer

An optimized implementation of the Nyström method for approximating self-attention.

Transformer in Transformer

An optimized implementation of attention performed inside local patches for image classification.

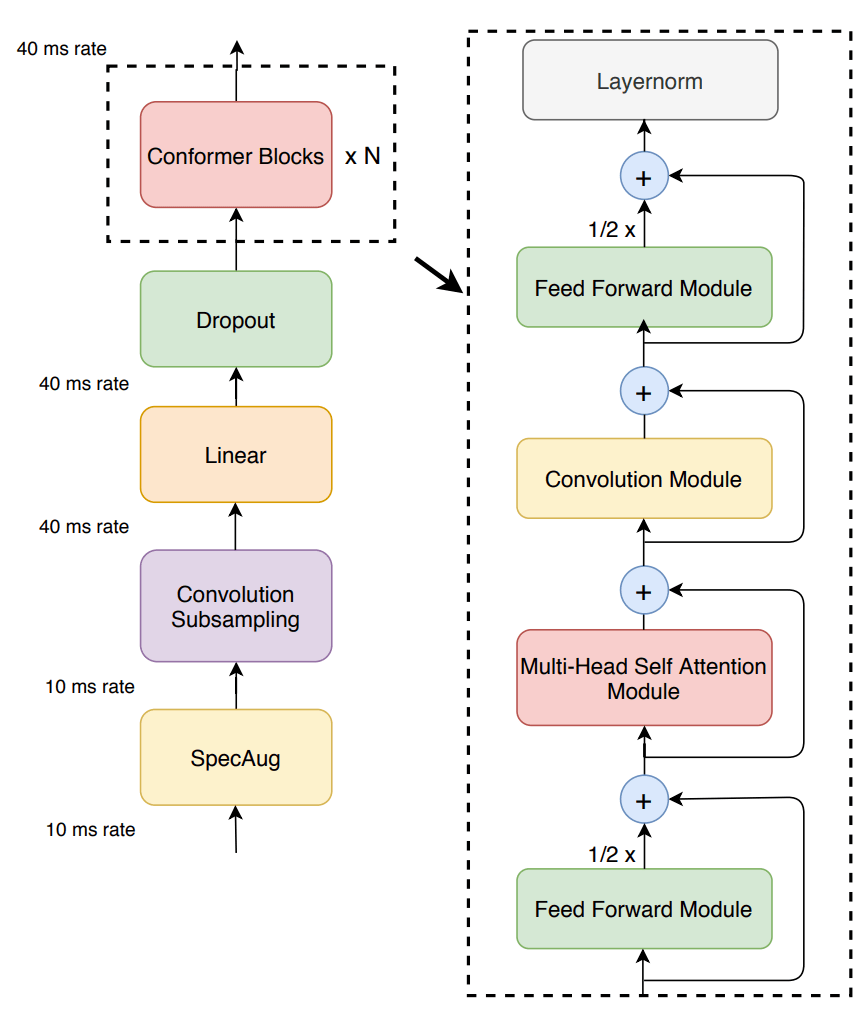

Conformer

One of the first implementations of the popular Conformer.

GLOM

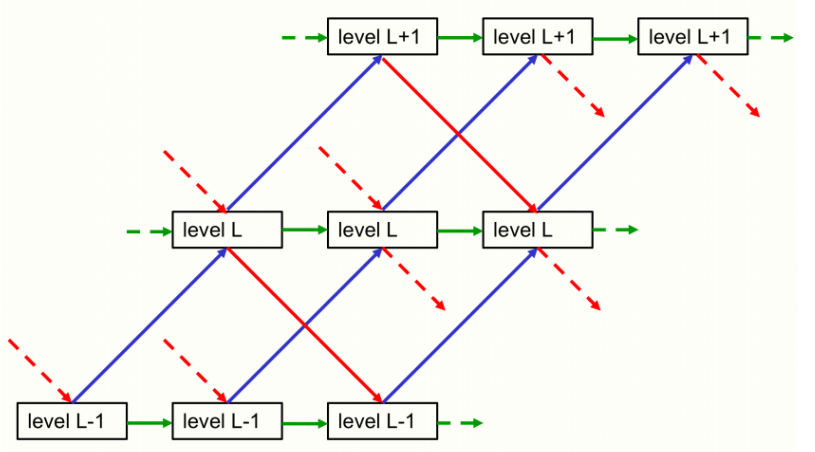

One of the first implementations of Hinton's popular GLOM, with optimizations to make it runnable.

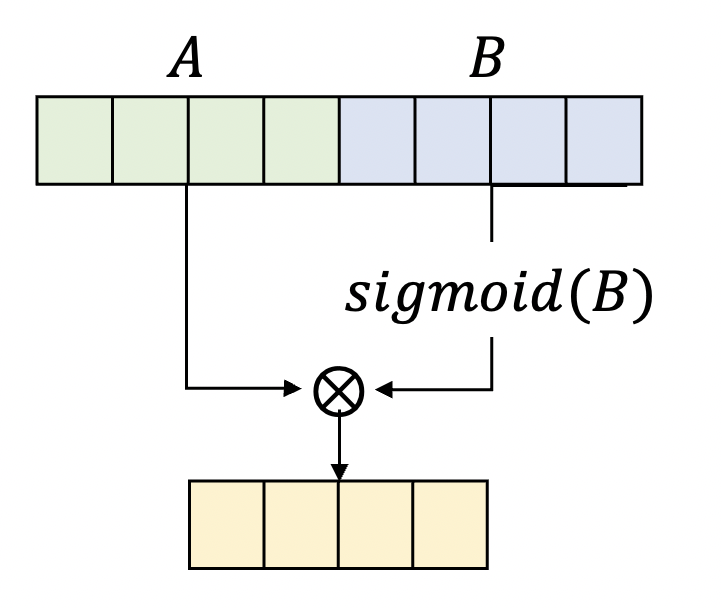

GLU

Gated Linear Units and many of their variants.

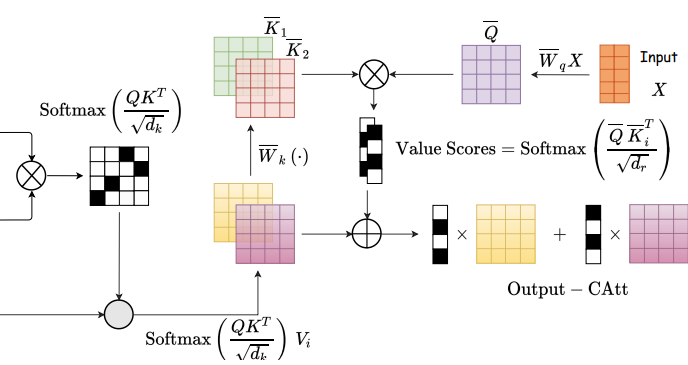

Compositional Attention

An optimized implementation of Compositional Attention (disentangling search and retrieval) and its variants.

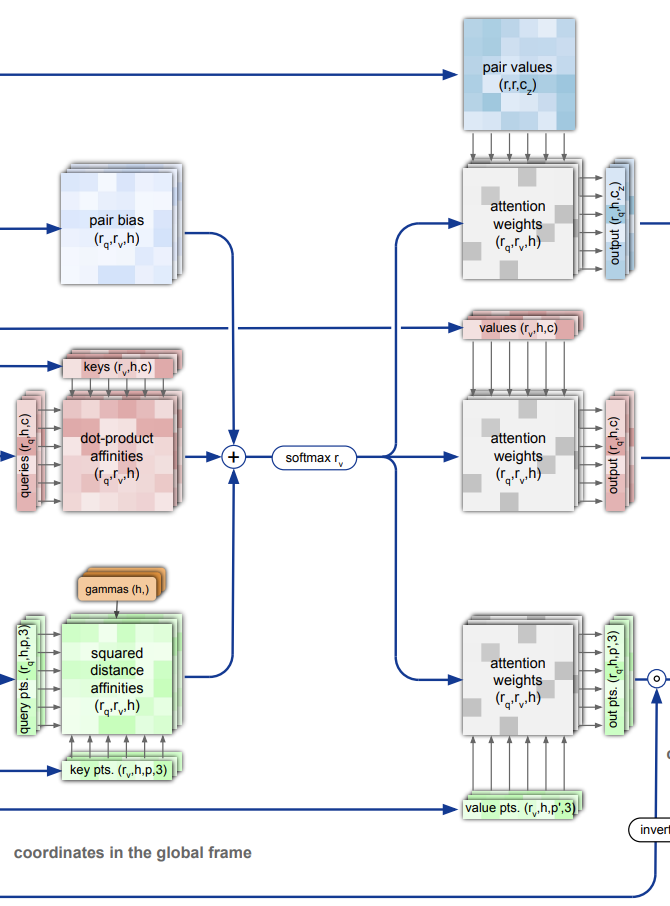

Invariant Point Attention

A general-purpose implementation of Invariant Point Attention from AlphaFold 2, usable beyond protein structure problems.

Wasm FAAS

A proof of concept for running machine learning models as serverless functions with Wasm.

conference talks

AirLetters: An Open Video Dataset of Characters Drawn in the Air, ECCV HANDS Workshop 2024

CPPE-5: Medical Personal Protective Equipment Dataset, Springer Nature Computer Science 2021